Nvidia CUDA toolkit compatibility install

When you install the JetPack SDK through the Nvidia SDK Manager, CUDA and its supporting libraries, such as cuDNN and the CUDA toolkit, are installed automatically and are ready to use afterwards, so nothing extra is needed to get started with the CUDA libraries. Installing from the CUDA repositories gives access to the latest release, but the wiser option is to stick with either the JetPack SDK or the Debian repositories, which distribute the most stable version of the framework.
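To confirm that the toolkit really is ready to use after installation, one option is to ask the CUDA compiler for its version. The following is a minimal sketch, not an official check: it assumes `nvcc` is either on the `PATH` or at `/usr/local/cuda/bin/nvcc` (a common default location, but an assumption here), and the helper names `find_nvcc`, `parse_nvcc_release`, and `installed_cuda_release` are made up for illustration.

```python
import os
import re
import shutil
import subprocess

# Assumed default location of the CUDA compiler; adjust if your install differs.
DEFAULT_NVCC = "/usr/local/cuda/bin/nvcc"


def find_nvcc():
    """Return a path to nvcc, or None if it cannot be found."""
    on_path = shutil.which("nvcc")
    if on_path:
        return on_path
    if os.path.isfile(DEFAULT_NVCC) and os.access(DEFAULT_NVCC, os.X_OK):
        return DEFAULT_NVCC
    return None


def parse_nvcc_release(text):
    """Extract (major, minor) from `nvcc --version` output, or None."""
    match = re.search(r"release (\d+)\.(\d+)", text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def installed_cuda_release():
    """Return the installed CUDA release as (major, minor), or None."""
    nvcc = find_nvcc()
    if nvcc is None:
        return None
    try:
        result = subprocess.run(
            [nvcc, "--version"],
            stdout=subprocess.PIPE,
            stderr=subprocess.PIPE,
            universal_newlines=True,  # text mode; also works on Python 3.6
            check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return None
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    if release is None:
        print("nvcc not found; the CUDA toolkit may be missing or not on PATH")
    else:
        print("CUDA release %d.%d" % release)
```

If the script reports that `nvcc` was not found on a freshly flashed board, the toolkit may still be installed but simply missing from the `PATH`.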

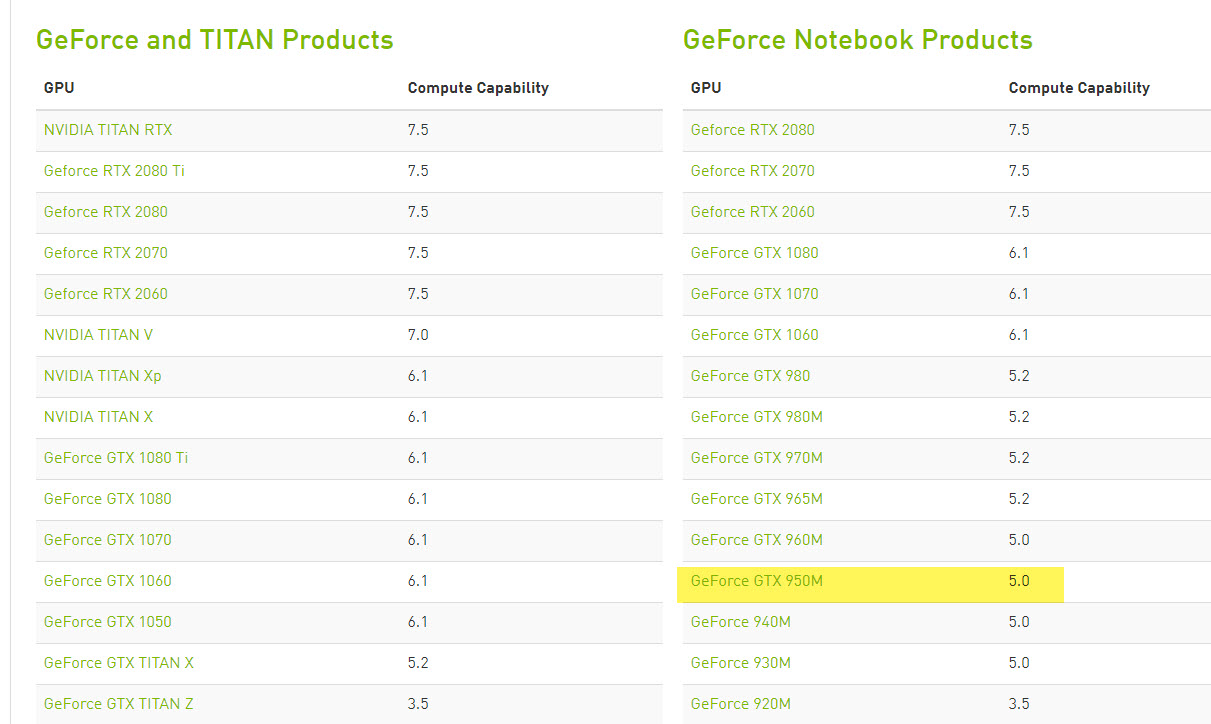

The framework works with popular machine learning frameworks such as TensorFlow, Caffe2, CNTK, Databricks, H2O.ai, Keras, MXNet, PyTorch, Theano, and Torch. CUDA is written primarily in C/C++, with additional support for languages such as Python and Fortran. Nvidia calls this framework, which enables parallel computing on the GPU, CUDA (Compute Unified Device Architecture).
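As an illustration of how one of these frameworks exposes CUDA from Python, the sketch below asks PyTorch whether it can see a CUDA device. It assumes PyTorch may or may not be installed and degrades gracefully if it is not; the function name `cuda_status` and the dictionary keys are hypothetical and chosen only for this example.

```python
def cuda_status():
    """Report whether PyTorch is installed and whether it can use CUDA."""
    status = {
        "torch_installed": False,
        "cuda_available": False,
        "cuda_version": None,
        "device_name": None,
    }
    try:
        import torch  # optional dependency; not assumed to be present
    except (ImportError, OSError):
        return status

    status["torch_installed"] = True
    # CUDA version PyTorch was built against; None on CPU-only builds.
    status["cuda_version"] = torch.version.cuda
    if torch.cuda.is_available():
        status["cuda_available"] = True
        status["device_name"] = torch.cuda.get_device_name(0)
    return status


if __name__ == "__main__":
    for key, value in cuda_status().items():
        print("%s: %s" % (key, value))
```

On a machine without PyTorch, every field keeps its default value, so the same script can be run before and after setting up the framework.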

However, because the Jetson Nano is built around special hardware, making the best use of its hardware-accelerated parallel computing on the GPU requires installing a special framework, against which machine learning programs can then be written.

Nvidia CUDA toolkit compatibility series

In terms of parallel processing, the Jetson Nano easily outperforms the Raspberry Pi series and most other single-board computers, which typically consist only of a CPU with one or more cores and lack a dedicated GPU. The Jetson Nano can be used as a general-purpose Linux-powered computer, and thanks to its GPU-accelerated processor it also has advanced uses in machine learning inference and image processing.
